This commentary originally appeared on The Brown Center Chalkboard.

Head Start, the federal early childhood education program, has expanded access to preschool to eligible students in low-income families. Head Start was established in 1965 as part of President Lyndon Johnson’s War on Poverty. The goal of the program is to promote school readiness through the provision of health, educational, nutritional, and social services.

In 2019, Congress authorized more than $10 billion for Head Start. Based on the most recent data, Head Start enrolls almost 1 million children annually. This substantial investment in children is justified by evidence that early investments, and preschool in particular, change outcomes for children.

Much of what we know about the effects of early childhood education on long-term outcomes comes from experiments. Decades-spanning longitudinal studies of experimental preschool programs like HighScope/Perry Preschool and Abecedarian find that those who participated in these early childhood educational interventions persist in education, have higher earnings, and commit fewer crimes than the control group. New research on the intergenerational effect of Perry Preschool by Nobel laureate James Heckman and Ganesh Karapakula finds that participants were more stably married, and that their children were less likely to be suspended from school and more likely to graduate from high school and be employed.

Would these experimentally determined findings from model preschool programs be replicated in Head Start? Since 1969, the federal government has asked researchers to answer the question: Does Head Start work? In 1998, Congress authorized the Department of Health and Human Services (HHS) to contract an independent national random assignment study of Head Start to determine whether Head Start improved school readiness: the Head Start Impact Study. The Head Start Impact Study followed about 5,000 3- and 4-year-olds who were randomly assigned to a treatment group (in which they had a seat in Head Start) or a control group (in which parents made their own choice without the initial offer of a Head Start seat). The study started in the fall of 2002 and continued to collect information on students through third grade, in the spring of 2007 and 2008.

In 2005, the first report about the Head Start Impact Study found that one year of Head Start improved cognitive skills, but the size of the effects was small. While this first report affirmed Head Start’s impact on school readiness, the final HHS report published in 2010 showed that by the end of first grade, the effects mostly faded out. According to the 2012 HHS report on the third grade follow-up, by the end of third grade there was no longer a discernible impact of Head Start. Due in part to these reports, some have concluded that while Head Start has some initial impact on kindergarten readiness, the fadeout in impact over early elementary school tempers the case for investing more in early childhood education. Yet these reports are not the final word on the Head Start Impact Study, in part because of the ways in which the experiment played out in the field. New research reanalyzes the Head Start Impact Study and finds that Head Start does improve cognitive skill. Let’s take a closer look at the problems with the experiment and what we can learn from the evidence in retrospect.

The Head Start Impact Study

The Head Start Impact Study was an experiment, but it was not a lab experiment. In a lab, experimental criteria like double-blind random assignment (where neither the tester nor subject knows whether she is in the treatment or control group) can be controlled. In a large field experiment that takes place in the real world, such conditions may not hold. We can look at a handful of criteria to see the extent to which a field experiment meets lab conditions: assignment to the treatment or control group must be random; whether the child is in the treatment or control group should be unknown to the Head Start center, the parent, and the child; and only the treatment group can receive the treatment. While the Head Start Impact Study set out to meet two of these conditions (random assignment and no crossover between the treatment and control groups), there were issues.

- “Random assignment” was not always random.

Assignment to treatment and control groups was not fully random. Random assignment to the Head Start Impact Study treatment and control groups was performed at each Head Start center in the study. A Head Start center staff person was part of the research team and assisted the independent researcher in the randomization process and in keeping in touch with study participants. One scholar on the advisory panel reported that Head Start directors in the study “were finding ways to circumvent the random assignment.” Depending on the types of students for whom the Head Start directors were bending the rules, the results of the study could be biased in a positive (taking a high-ability student) or negative (taking a high-need student) direction.

- A substantial share of the control group received the treatment; a substantial share of the treatment group did not.

There were also significant problems with compliance with random assignment. According to the Head Start Impact Study Technical Report, about one in six children in the control group enrolled in Head Start in the first year of the Head Start Impact Study. This is in part because the study contractors could not have “totally monitored or compelled” Head Start centers to deny a seat to a child in the control group. In addition, about 15% of children assigned to the treatment group did not enroll in Head Start. Luckily, researchers have techniques to account for this issue, as the reanalyses described in the next section show.
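To make the consequence of this noncompliance concrete, here is a minimal sketch of one textbook adjustment, the instrumental-variables (Wald) estimator, which divides the effect of being offered a seat by the gap in enrollment rates that the offer produced. It is offered as an illustration only and is not necessarily the method used in either reanalysis. The enrollment rates come from the figures above; the intention-to-treat effect (`hypothetical_itt`) and the function name `wald_estimate` are assumptions made up for the example.

```python
def wald_estimate(itt_effect, enroll_rate_treatment, enroll_rate_control):
    """Scale an intention-to-treat (ITT) effect by the enrollment gap.

    The result is the implied effect of enrolling for children whose
    enrollment was actually changed by the random offer of a seat.
    """
    first_stage = enroll_rate_treatment - enroll_rate_control
    if first_stage <= 0:
        raise ValueError("the offer of a seat must raise enrollment")
    return itt_effect / first_stage


# From the text: about 15% of the treatment group did not enroll,
# and about one in six control-group children did enroll.
enroll_treatment = 1 - 0.15
enroll_control = 1 / 6

# ASSUMPTION: a made-up ITT effect in standard deviation units,
# used only to show the arithmetic. It is not a study result.
hypothetical_itt = 0.14

first_stage = enroll_treatment - enroll_control
effect = wald_estimate(hypothetical_itt, enroll_treatment, enroll_control)

print(f"Enrollment gap created by the offer: {first_stage:.3f}")
print(f"Implied effect of enrolling: {effect:.3f}")
```

Because the offer of a seat moved enrollment by only about 68 percentage points rather than 100, a comparison of the groups as assigned understates the effect of actually attending. Dividing by the enrollment gap corrects for that dilution under the usual instrumental-variables assumptions.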

Learning From Study Imperfections

The Head Start Impact Study wasn’t fully random and did not have a clean control group. If the Head Start Impact Study foundered along these critical dimensions, what can we learn from it? Counterintuitively, perhaps, Head Start Impact Study’s imperfections can teach us a lot about the effect of Head Start.

Two new studies, the first by Patrick Kline and Christopher Walters and the second by Avi Feller, Todd Grindal, Luke Miratrix, and Lindsay Page, leverage Head Start Impact Study random assignment noncompliance to identify the effect of going to Head Start against going to a different center-based preschool or to no preschool at all. Once they account for the experimental breakdowns of the Head Start Impact Study, they find that Head Start improves school readiness for children who would otherwise be in home-based care.

How do these researchers identify the causal impact of Head Start using these data? In short, by remembering that what parents want for their children mattered more for where they went to preschool than random assignment.

Imagine you are the parent of a 3-year-old and eligible to apply for a seat for your child at a Head Start center. What you want for your child matters a lot when it comes to deciding where to enroll her in preschool. Enrolling your child in Head Start may be your first choice, it may be your backup to some other preschool option, it may be competing in your mind with another childcare option, or it may be your only choice.

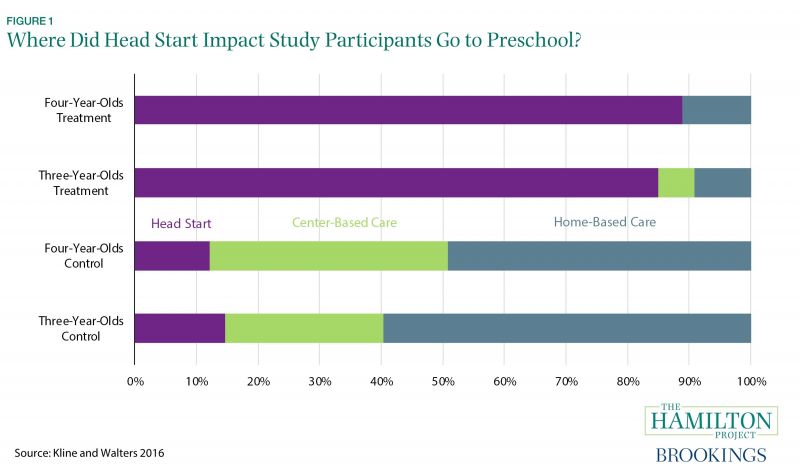

When you go to sign up for a spot at Head Start, you are also randomized into an experiment. Your underlying preference for having your child go to Head Start or not now must interact with whether your child has been assigned to the treatment group or the control group (that is, whether your child was in fact offered a seat at Head Start). Depending on your prior preference, the offer of a seat (treatment) may cause you to pick Head Start for your child over other options available to you. If you were denied a seat (control) but you had your heart set on sending your child to Head Start, you might call the center director to plead your case or apply to a different center nearby.

The most substantial contribution to the deterioration of the Head Start Impact Study treatment and control groups resulted from parental prerogatives: For some, what a parent wanted for their child trumped random assignment. For others, compliance with their randomly assigned role determined whether the child enrolled in Head Start or not. As data from Kline and Walters illustrate, about 15% of 3-year-olds and 21% of 4-year-olds randomly assigned to Head Start did not enroll in Head Start, and about 15% of 3-year-olds and 12% of 4-year-olds experimentally denied a seat in Head Start found a way to enroll.

The interaction between random assignment in the Head Start Impact Study and actual preschool enrollment behavior creates information about whether random assignment moves a child from at-home care to Head Start or from a competing center-based care arrangement, like state pre-K, to Head Start.

Both of these studies leverage this new information to answer the primary policy question that Congress wrote into law in 1998: does Head Start improve school readiness compared to the alternative? Given that there are two alternatives to Head Start (home-based care and other preschools), these scholars address them separately.

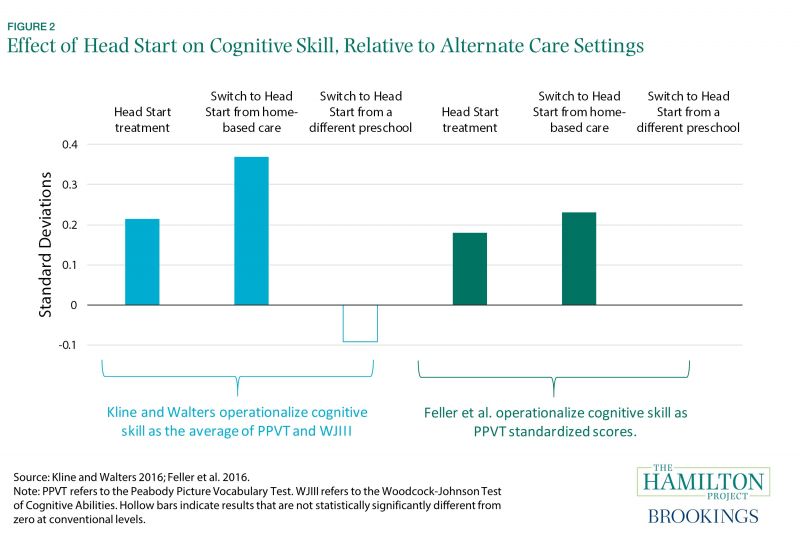

Figure 2 shows the effect of Head Start based on what the child would otherwise be getting. The Head Start treatment bars correspond to the techniques used in the HHS reports and show about a fifth of a standard deviation increase in cognitive skill. In both papers, if a child would otherwise be in home-based care, Head Start caused a larger increase in cognitive skill than this baseline. Head Start caused more than a third of a standard deviation increase in cognitive skill in the Kline and Walters analysis and almost a quarter of a standard deviation increase in Feller, Grindal, Miratrix, and Page. For children who otherwise would have gone to preschool, going to Head Start did not make an additional difference in cognitive skills.
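One way to see why the pooled estimate sits below the home-based care estimate is to treat it as a weighted average of the effects for the two kinds of children. The sketch below does that arithmetic under simplifying assumptions: the share of children who would otherwise be in home-based care (`share_home`) is hypothetical, the home-care effect is a rounded stand-in for "more than a third of a standard deviation," and the function name `pooled_effect` is made up for the example. Neither paper is claimed to compute its results this way.

```python
def pooled_effect(share_home, effect_home, effect_other):
    """Weighted average of Head Start effects across two counterfactuals.

    share_home is the fraction of children who would otherwise be in
    home-based care; the remainder would attend another preschool.
    """
    if not 0.0 <= share_home <= 1.0:
        raise ValueError("share_home must be between 0 and 1")
    share_other = 1.0 - share_home
    return share_home * effect_home + share_other * effect_other


# Rounded from the text: "more than a third of a standard deviation"
# for children who would otherwise be in home-based care.
effect_home = 0.35

# From the text: no additional difference for children who would
# otherwise have attended another preschool.
effect_other = 0.0

# ASSUMPTION: a hypothetical share, chosen only for illustration.
share_home = 0.6

pooled = pooled_effect(share_home, effect_home, effect_other)
print(f"Pooled effect in standard deviations: {pooled:.2f}")
```

With these illustrative inputs the pooled effect lands near a fifth of a standard deviation, which is the kind of dilution the figure suggests: a sizable gain for children coming from home-based care is averaged with roughly no gain for children who would have attended another preschool.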

Conclusion

The trend toward experiments in education research is “healthy” and welcome, but researchers and policymakers should take into account not only the ostensible setup of a study, but also whether the methods used to identify effects are rigorous. The initial Head Start reports did not account for the deterioration of the experimental ideal, in which random assignment alone determines who is in the treatment and control groups and only those in the treatment group receive treatment.

For Head Start, quasi-experimental studies that plausibly randomly assign children to treatment and control groups and maintain that assignment, and the new papers that leverage the Head Start Impact Study to reanalyze the collected data, are more rigorous, and all find that center-based early childhood education improves school readiness and has impacts not only into adulthood, but on the next generation. Natural experiments on the effects of Head Start show that Head Start causes better health, educational, and economic outcomes over the long term as a consequence of participation, though the effect sizes are smaller than those from the model programs. Research that Diane Schanzenbach and I have published shows that the effect of Head Start extends to noncognitive skills and persists into how participants parent their children: overall and particularly among African American participants, we find that Head Start also causes social, emotional, and behavioral development that becomes evident in adulthood measures of self-control, self-esteem, and positive parenting practices. New research by Chloe Gibbs and Andrew Barr finds intergenerational effects of Head Start along the same lines as the Heckman work: the children of those who were exposed to Head Start saw reduced teen pregnancy and criminal engagement and increased educational attainment.

While some have taken the initial Head Start Impact Study reports at face value, the new and carefully designed reanalyses of the Head Start Impact Study teach us not only about the positive impacts of Head Start, but about research design considerations as experiments in education become more prevalent. The Head Start Impact Study reanalyses and the decades of research on Head Start show that on a variety of outcomes from kindergarten readiness to intergenerational impacts, Head Start does work, particularly for students who otherwise would not be in center-based care.

The author thanks Michael Hansen, Ryan Nunn, Lindsay Page, Diane Schanzenbach, Jay Shambaugh, and Jon Valant for thoughtful conversations and comments as well as Emily Moss and Jana Parsons for superb research assistance.